Luddite Houses

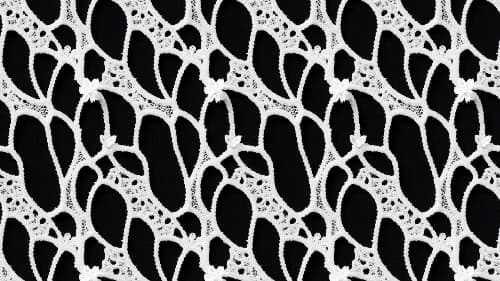

As the story goes, the Luddites were members of a secret organisation in the early 19th century. Their agenda was to rebel against machines, because machines were taking away the livelihood they were accustomed to. The machines produced better, faster and cheaper lace than any human weaver could compete with. Today, AI can draw on deeper and broader data, and a more complex context, than any human architect could reference, and machines are once again changing the livelihood we are accustomed to. As architects we are trained to be imaginative, creative and visionary. Now AI is being trained to be capable of that too. Being creative, imaginative and visionary, we architects at collcoll.cc welcome this opportunity to bring AI into our toolset. This is Luddite Houses: a tribute to history repeating itself in a different context, layered, and only to be interpreted after being experienced. In the vision of Luddite Houses, we imagine the archetypal typology of the family house as a structure obscured by a custom metal facade that reinterprets lace.

Created with Midjourney.

Notes:

- The English feel of the environments was not prompted at all. Perhaps the AI itself empathises with the English lace makers of the 19th century and understands the historical context?
- The black-and-white thumbnail was generated in Midjourney using the --tile parameter. Such b&w bitmaps can be transformed into inputs for metal sheet punching or laser cutting.
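The note above on turning --tile bitmaps into input for metal sheet punching or laser cutting can be sketched in code. This is a minimal Python sketch, assuming the tile has already been reduced to a grid of grayscale values (0 = black, 255 = white), for example by loading the PNG with Pillow's `Image.open(...).convert("L")` and downscaling it to one pixel per hole. The function names `tile_to_holes` and `holes_to_svg`, the hole pitch, the threshold of 128 and the choice that dark pixels become holes are all hypothetical; real punching tools and laser cutters have their own input formats and fabrication limits.

```python
from typing import List, Tuple


def tile_to_holes(tile: List[List[int]], pitch_mm: float, reps_x: int, reps_y: int,
                  threshold: int = 128) -> List[Tuple[float, float]]:
    """Return hole centres (x, y) in mm, repeating a seamless tile reps_x by reps_y times.

    Hypothetical convention: each pixel maps to one square cell of size pitch_mm,
    and pixels darker than `threshold` become punched holes.
    """
    rows, cols = len(tile), len(tile[0])
    holes = []
    for ty in range(reps_y):
        for tx in range(reps_x):
            for r in range(rows):
                for c in range(cols):
                    if tile[r][c] < threshold:  # dark pixel -> hole
                        x = (tx * cols + c + 0.5) * pitch_mm  # centre of the cell
                        y = (ty * rows + r + 0.5) * pitch_mm
                        holes.append((x, y))
    return holes


def holes_to_svg(holes: List[Tuple[float, float]], width_mm: float, height_mm: float,
                 radius_mm: float) -> str:
    """Write the holes as SVG circles; the viewBox makes one user unit equal 1 mm."""
    circles = "\n".join(
        f'  <circle cx="{x:.2f}" cy="{y:.2f}" r="{radius_mm:.2f}"/>' for x, y in holes
    )
    return (
        f'<svg xmlns="http://www.w3.org/2000/svg" width="{width_mm}mm" height="{height_mm}mm" '
        f'viewBox="0 0 {width_mm} {height_mm}">\n{circles}\n</svg>'
    )


# Usage: a 2 x 2 checkerboard tile repeated twice horizontally on a 10 mm grid.
holes = tile_to_holes([[0, 255], [255, 0]], pitch_mm=10.0, reps_x=2, reps_y=1)
svg = holes_to_svg(holes, width_mm=40, height_mm=20, radius_mm=3.0)
print(svg)
```

Because a --tile image repeats seamlessly, the pattern can be stepped across a whole facade panel simply by offsetting the tile, which is all the `reps_x` and `reps_y` loops do. SVG is chosen here only as a common, easily inspected vector format; the format a given fabricator actually accepts should be checked.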

FROM IDEA TO DESIGN

CUSTOM WORKFLOW ARCHITECT + AI

Capable AI can take many forms in the architectural process, and it has been with us for a while. But only in the last few months has there been an enormous boost in the number of architects using algorithms built on powerful diffusion models. Because it finally FEELS creative! And also because the almost immediate sensory feedback triggers visual gluttony.

But how should an architect talk to such an algorithm? For me it was important to work with what I see as the algorithm's strongest attribute: contextual and referential layering. In the workflow described below, the layering and referencing is driven from abstract to concrete (similarly to how diffusion works). The simple workflow that I found works for me, using the "remix" feature of Midjourney, is as follows:

- 1st generation :: concept image :: an abstract, conceptual, non-architectural element is rendered in a realistic environment.
- 2nd generation :: typology image :: the conceptual element becomes an architectural typology by changing the part of the prompt that references the subject.
- 3rd generation :: final result :: adding reference imagery (an existing building) guides the algorithm to deliver a realistic depiction of architecture that respects the style of the provided imagery. In my workflow I use images of buildings designed in my studio collcoll.cc, so that the output respects our style and taste.

This workflow reliably and repeatably provides me with inspiration, because it iterates on multiple aspects: the conceptual prompt, the architectural training data of the algorithm, and our own architectural experience and taste.
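The three generations of the remix workflow can be written down as a minimal Python sketch. It only assembles prompt text to paste into Midjourney with remix mode switched on; it does not call Midjourney itself. The class `RemixWorkflow`, the prompt wording, the example concept and environment, and the reference URL are hypothetical illustrations, not the prompts used for Luddite Houses. The one Midjourney convention it relies on is that image URLs placed at the start of a prompt are read as image prompts.

```python
from dataclasses import dataclass, field
from typing import List, Sequence


@dataclass
class RemixWorkflow:
    """Hypothetical container for the three prompts of the remix workflow."""
    concept: str       # abstract, non-architectural element
    typology: str      # architectural typology the concept turns into
    environment: str   # realistic setting, kept constant across generations
    references: List[str] = field(default_factory=list)  # URLs of studio reference images

    def prompt(self, subject: str, images: Sequence[str] = ()) -> str:
        # Image URLs (if any) come first, followed by the text prompt.
        return " ".join([*images, f"{subject} in {self.environment}, realistic photograph"])

    def generations(self) -> List[str]:
        typology_subject = f"{self.typology} wrapped in {self.concept}"
        return [
            # 1st generation :: concept image
            self.prompt(self.concept),
            # 2nd generation :: typology image (only the subject changes)
            self.prompt(typology_subject),
            # 3rd generation :: final result (same text, plus reference imagery)
            self.prompt(typology_subject, self.references),
        ]


wf = RemixWorkflow(
    concept="metal lace",
    typology="archetypal family house",
    environment="a green meadow",
    references=["https://example.com/studio-reference.jpg"],  # placeholder URL
)
for step in wf.generations():
    print(step)
```

Keeping the environment fixed while only the subject changes mirrors the 2nd-generation step, where just the part of the prompt referencing the subject is edited; the 3rd generation adds the reference images without touching the text.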